What is an AI agent?

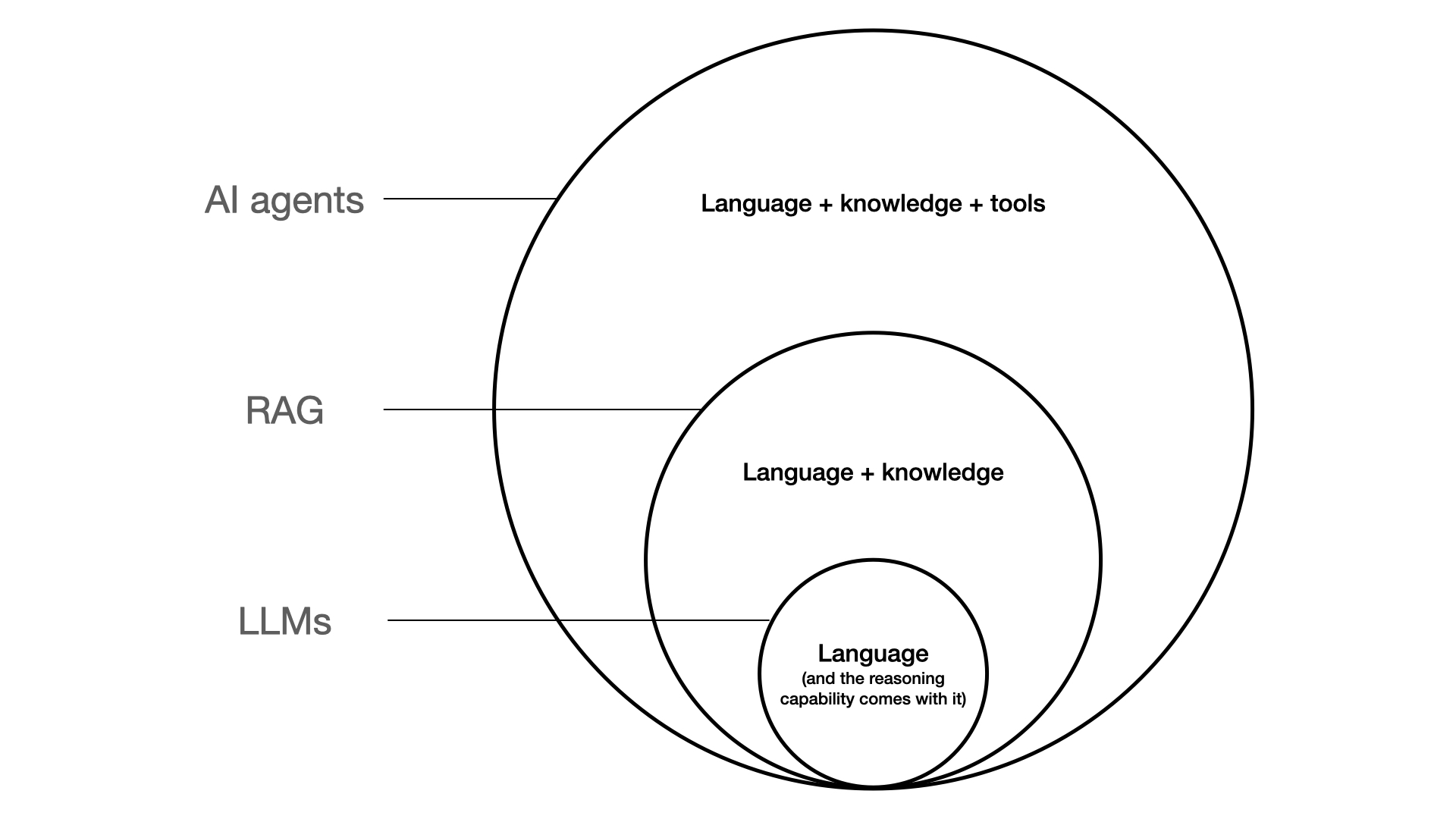

An illustration of different AI systems’ capabilities, where LLMs sit at the core.

Table of contents:

- What is an AI agent?

- Taking actions with tools

- Reasoning and planning

- Multi-agents

- Conclusion

What is an AI agent?

Previously we discussed what Retrieval-Augmented Generation (RAG) is and why it is a powerful tool for building useful applications with Large Language Models (LLMs) while reducing hallucination. Please refer to the previous blogs (part one, two, and three) for more details about RAG.

But RAG systems have some limitations. For example, a RAG system requires a predefined knowledge base to retrieve information from. A RAG system can also only produce text output (i.e., act as a chatbot); it cannot take actions to actively interact with the real world.

Imagine LLMs with access to a number of tools, such as searching the Internet, sending emails, or even generating and executing code. Suddenly we can build AI applications that interact with the real world and automate many workflows end to end. An AI agent is an AI system that is capable of autonomously planning and taking actions by using different tools. In this blog, we will explain what an LLM-based agent is and discuss how it works.

Taking actions with tools

A defining feature of AI agents is that they can take actions and interact with the world through tools. A tool is essentially a software function with a description and a schema of inputs and outputs, so that an LLM can understand what the tool does and how to use it. Inside a tool, we can either perform operations locally (for example, first generate a SQL query and then execute it) or call an external API from third-party software (for example, a search engine API).

Let’s check out LangChain’s documentation on tools and toolkits. I am impressed by the variety of tools and the number of very useful applications we can build with those predefined tools. But wait … there’s more! It is actually relatively easy to define custom tools. Given that most software and platforms today have APIs, AI could accomplish a lot by interacting with almost everything (if allowed access) in the digital world, including databases, cloud platforms, and workplace software.
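To make the idea of "a function with a description and a schema" concrete, here is a minimal sketch in plain Python. It is not LangChain's API: the `Tool` class, its field names, and the `word_count` example are all hypothetical and only illustrate what metadata an LLM would need to see.

```python
import json
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Tool:
    """A function plus the metadata an LLM needs to decide when and how to call it."""
    name: str
    description: str
    input_schema: Dict[str, Any]  # JSON-Schema-style description of the arguments
    func: Callable[..., Any]

    def spec(self) -> str:
        """Render the metadata as JSON text that could be placed in an LLM prompt."""
        return json.dumps({
            "name": self.name,
            "description": self.description,
            "parameters": self.input_schema,
        })

    def run(self, **kwargs: Any) -> Any:
        """Check that required arguments are present, then call the function."""
        missing = [k for k in self.input_schema.get("required", []) if k not in kwargs]
        if missing:
            raise ValueError(f"missing arguments: {missing}")
        return self.func(**kwargs)


def get_word_count(text: str) -> int:
    """A trivial local operation standing in for real work (a SQL query, an API call)."""
    return len(text.split())


# Hypothetical example tool, defined only for illustration.
word_count_tool = Tool(
    name="word_count",
    description="Count the number of whitespace-separated words in a text.",
    input_schema={
        "type": "object",
        "properties": {"text": {"type": "string"}},
        "required": ["text"],
    },
    func=get_word_count,
)

print(word_count_tool.spec())
print(word_count_tool.run(text="hello agent world"))
```

The LLM only ever sees the output of `spec()`; the agent framework is responsible for parsing the model's chosen arguments and calling `run()` on its behalf.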

Here we will discuss three types of tools just as examples:

Search engines and websites

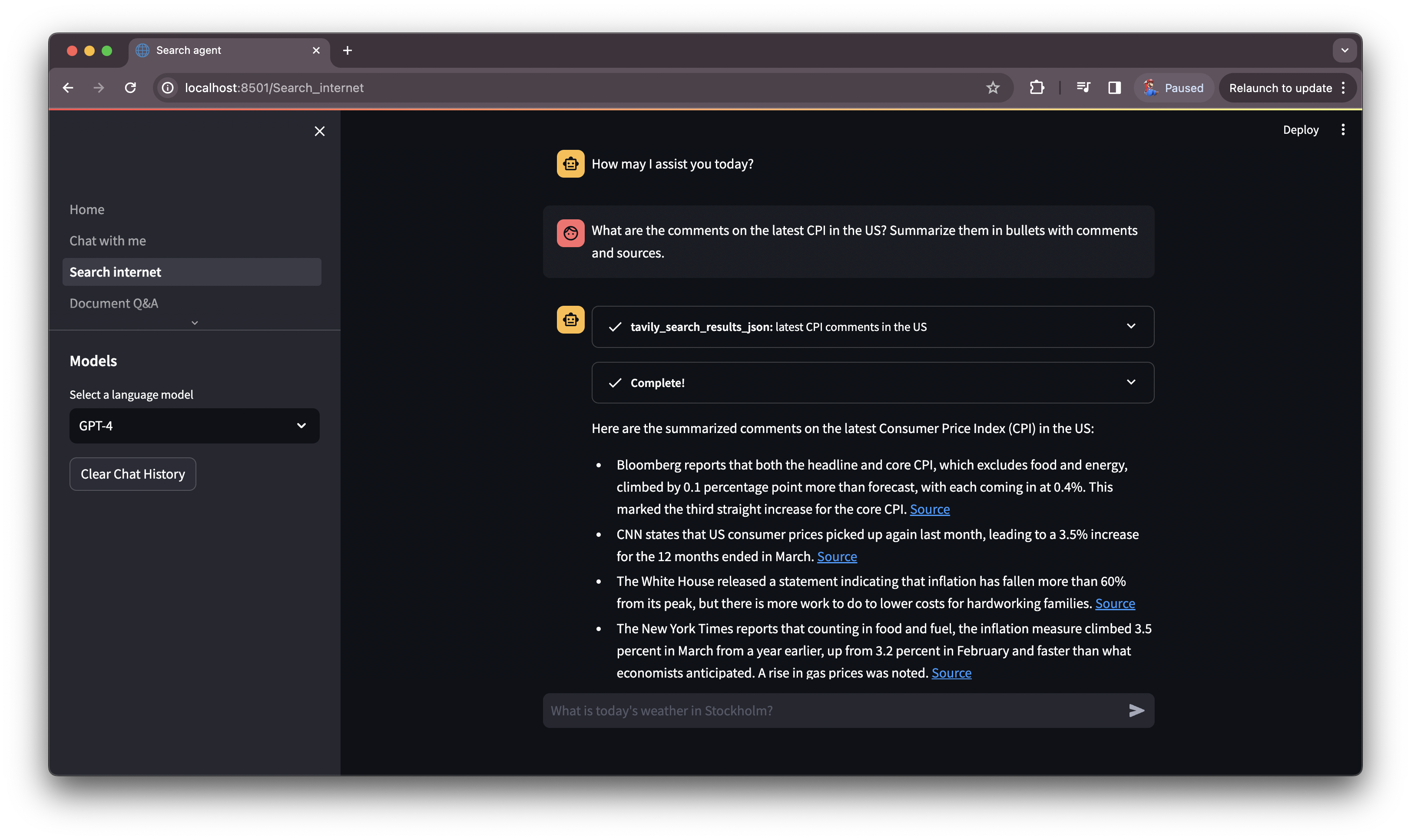

Tools such as Google Search, Bing Search, and Tavily Search allow LLMs to actively search the Internet for relevant information and answer a user’s query. In comparison to RAG, a search agent does not have any predefined vector database, but instead relies on the outputs from the search tool to generate the answer.

There are also tools for searching specific websites, such as ArXiv, PubMed, Wikipedia, and Yahoo Finance. Those tools are very useful when we know which domain knowledge is required for the application. For example, if we are building an AI assistant for scientific researchers, ArXiv and/or PubMed are the websites for finding relevant research papers; if the app is designed for business users, Yahoo Finance is a likely information source.

A search agent with Tavily Search and GPT-4.
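The flow of a search agent can be sketched in a few lines: search first, then ask the LLM to answer from the returned snippets. The sketch below is an assumption-laden illustration, not the Tavily or OpenAI API. `fake_search` and `fake_llm` are stand-ins so the code runs offline, and the result fields (`title`, `url`, `content`) are a hypothetical shape.

```python
from typing import Callable, Dict, List

SearchFn = Callable[[str], List[Dict[str, str]]]
LLMFn = Callable[[str], str]


def build_search_prompt(question: str, results: List[Dict[str, str]]) -> str:
    """Put the search snippets into the prompt so the LLM answers from them."""
    sources = "\n".join(
        f"[{i}] {r['title']} ({r['url']}): {r['content']}"
        for i, r in enumerate(results, start=1)
    )
    return (
        "Answer the question using only the search results below. "
        "Cite sources by their number.\n\n"
        f"Search results:\n{sources}\n\nQuestion: {question}"
    )


def answer_with_search(question: str, search: SearchFn, llm: LLMFn) -> str:
    results = search(question)  # live lookup instead of a predefined vector database
    return llm(build_search_prompt(question, results))


# Stand-ins so the sketch runs offline; a real agent would call a search API and an LLM.
def fake_search(query: str) -> List[Dict[str, str]]:
    return [{
        "title": "Example page",
        "url": "https://example.com",
        "content": f"Notes about {query}.",
    }]


def fake_llm(prompt: str) -> str:
    # Echo the last prompt line so the data flow is visible.
    return "stub answer for: " + prompt.splitlines()[-1]


print(answer_with_search("What is ReAct?", fake_search, fake_llm))
```

Swapping `fake_search` for a site-specific search function (for example, one that only queries research papers) is how the same skeleton would be specialized to a domain.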

Code generation and execution

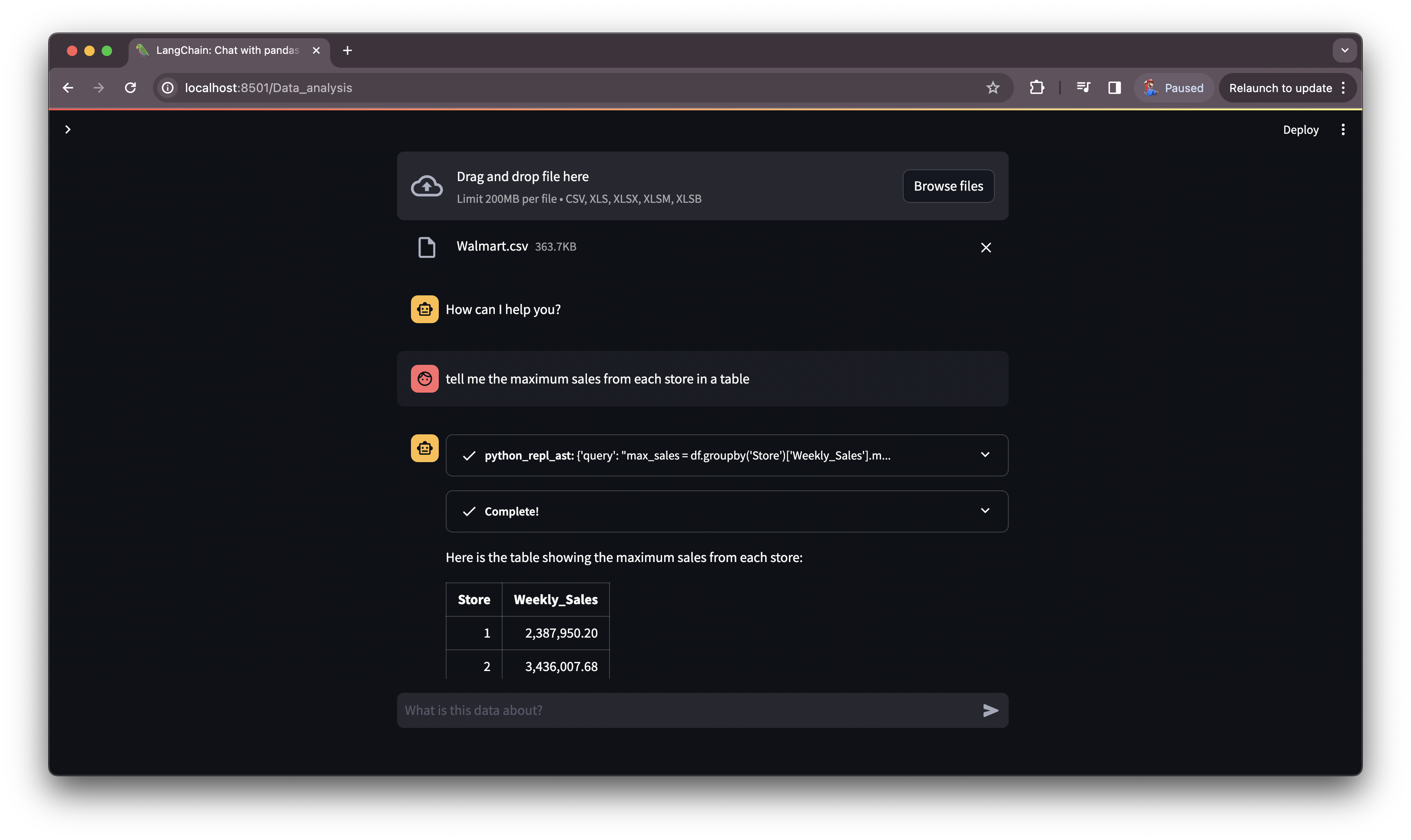

Another very powerful category of AI agents is code generators and executors. Those agents translate a user’s natural language query into code (such as SQL, Python, or JavaScript), execute the generated code, and finally use the returned results to answer the user’s query or perform actions such as modifying data in a database.

However, code generation and execution agents carry potential security risks. For example, without proper access control, an AI agent might access sensitive data in a database or even cause data corruption. AI-generated code might also contain vulnerabilities. Proper code review and validation should be performed on AI-generated code.

A data agent which generates and executes Python code to analyze data and answer questions.
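As one illustration of validating generated code before running it, the sketch below only executes model-written SQL if it is a single `SELECT` statement. The function name `run_readonly_query` and the `orders` table are hypothetical, and the string check is deliberately simplistic: it is an assumption-level example, and a real deployment should also rely on database-level permissions rather than on text inspection alone.

```python
import sqlite3


def run_readonly_query(conn: sqlite3.Connection, sql: str, max_rows: int = 100):
    """Execute model-generated SQL only if it is a single SELECT statement."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:
        # Conservative: also rejects semicolons inside string literals.
        raise PermissionError("multiple statements are not allowed")
    if not statement.lower().startswith("select"):
        raise PermissionError("only SELECT statements are allowed")
    return conn.execute(statement).fetchmany(max_rows)


# Small in-memory database for the demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 32.5)])

generated_sql = "SELECT SUM(amount) FROM orders;"  # pretend an LLM wrote this
rows = run_readonly_query(conn, generated_sql)
print(rows)

try:
    run_readonly_query(conn, "DROP TABLE orders")  # a destructive statement is refused
except PermissionError as err:
    print("blocked:", err)
```

The row limit (`max_rows`) is a second, independent guard: even an allowed query cannot pull an unbounded amount of data into the LLM's context.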

Workplace and enterprise software

AI agents can also use APIs to interact with workplace tools (e.g., Microsoft 365) and enterprise software (e.g., Salesforce). For example, an AI agent can read an incoming email from a customer, generate and send a reply, and update the Salesforce record accordingly. This kind of AI agent can automate many workflows and save a lot of time for sales and customer support professionals. Two Python packages could be used to build this example agent: python-o365 and simple-salesforce.

Reasoning and planning

We have now discussed different tools and what AI agents can do with them. A key question remains: how does an LLM reason about a complex task and take the right actions to solve it? This is still an active research topic, and much remains to be done. We will only discuss the two most popular techniques: Chain-of-Thought (CoT) and ReAct.

CoT

“Chain of thought — a coherent series of intermediate reasoning steps that lead to the final answer for a problem”

In 2022, Wei et al. published the research paper “Chain-of-thought prompting elicits reasoning in large language models” (NeurIPS) [1]. In this paper, the authors introduced a prompting technique that lets LLMs perform step-by-step intermediate reasoning. There are several variations of CoT: zero-shot CoT, few-shot CoT, self-consistency CoT (Wang et al. [3]), and Tree-of-Thought (ToT, Yao et al. [4]).

Zero-shot CoT can be as simple as adding an instruction such as “Think step by step”, while few-shot CoT provides the LLM with a few examples of how to reason through a complex problem. Self-consistency is achieved by sampling multiple CoT outputs and selecting the most consistent answer. Finally, ToT uses an LLM to generate a tree of thoughts from intermediate steps and a second LLM call (possibly to the same model) to evaluate each of those thoughts. The answer is obtained by selecting the most promising path in the tree of thoughts.
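The first three variants can be sketched without any real model. In the code below, the prompt templates, the "The answer is X." convention used by `extract_answer`, and the `make_fake_sampler` stand-in for a sampled LLM call are all assumptions made for illustration; they are not taken from the papers' code.

```python
import itertools
import re
from collections import Counter
from typing import Callable, List, Optional, Tuple


def zero_shot_cot(question: str) -> str:
    """Zero-shot CoT: append a step-by-step instruction to the question."""
    return f"Q: {question}\nA: Let's think step by step."


def few_shot_cot(question: str, examples: List[Tuple[str, str]]) -> str:
    """Few-shot CoT: prepend worked (question, reasoning) examples."""
    shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return f"{shots}\n\nQ: {question}\nA:"


def extract_answer(completion: str) -> Optional[str]:
    """Assumes the completion ends with a sentence of the form 'The answer is X.'."""
    match = re.search(r"The answer is\s+(.+?)\.?\s*$", completion.strip())
    return match.group(1) if match else None


def self_consistent_answer(
    prompt: str, sample: Callable[[str], str], n: int = 5
) -> Optional[str]:
    """Self-consistency: sample n reasoning paths and majority-vote the final answers."""
    answers = [extract_answer(sample(prompt)) for _ in range(n)]
    votes = Counter(a for a in answers if a is not None)
    return votes.most_common(1)[0][0] if votes else None


def make_fake_sampler(completions: List[str]) -> Callable[[str], str]:
    """Stand-in for sampling an LLM at non-zero temperature: cycles canned outputs."""
    cycle = itertools.cycle(completions)
    return lambda prompt: next(cycle)


sampler = make_fake_sampler([
    "5 + 3 = 8. The answer is 8.",
    "5 + 3 = 9. The answer is 9.",  # one faulty reasoning path
    "Start with 5, add 3 to get 8. The answer is 8.",
])
best = self_consistent_answer(zero_shot_cot("What is 5 + 3?"), sampler, n=3)
print(best)
```

The vote is taken over the extracted final answers, not over the reasoning text, so two different reasoning paths that reach the same answer count as agreement.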

ReAct

“Explore the use of LLMs to generate both reasoning traces and task-specific actions in an interleaved manner”

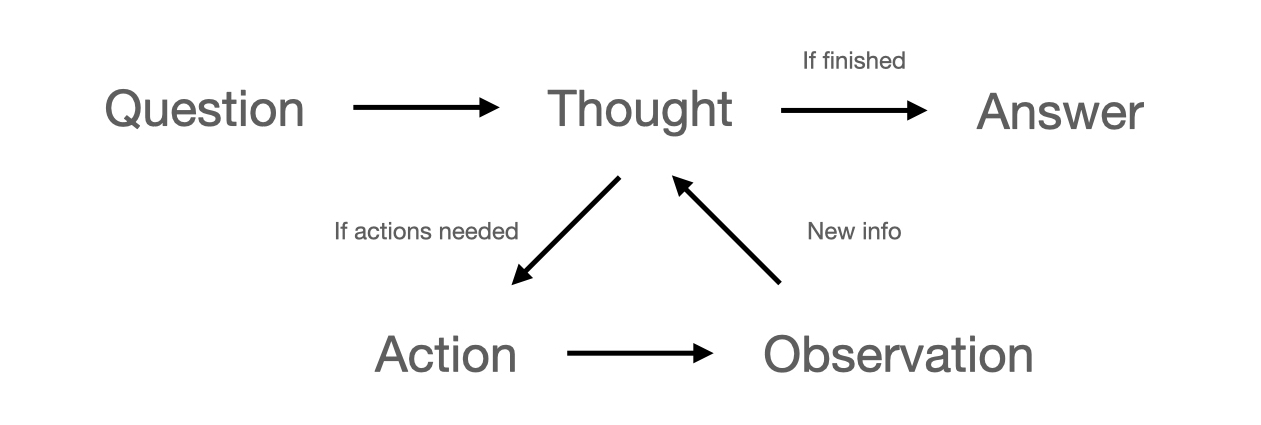

In 2023, Yao et al. published the paper “ReAct: Synergizing Reasoning and Acting in Language Models” (ICLR) [2]. Compared to CoT, ReAct allows LLMs to take actions in intermediate steps, observe the outputs, and then reason about the next steps until the problem is solved or an error is raised. Most ReAct agents also utilize various CoT techniques in the “Thought” step. Below is an illustration of how a ReAct agent works.

A ReAct agent conducts multiple iterations of actions and reasoning until the problem is solved.
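The thought, action, observation loop can be written as a short sketch. Everything here is illustrative and assumed: the `Action: tool[input]` and `Final Answer:` text formats, the `calculator` tool, and the `scripted_llm` stand-in that replaces a real model so the loop runs offline.

```python
import re
from typing import Callable, Dict

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")
FINAL_RE = re.compile(r"Final Answer:\s*(.*)")
BINARY_RE = re.compile(r"\s*(-?\d+(?:\.\d+)?)\s*([-+*/])\s*(-?\d+(?:\.\d+)?)\s*")


def calculator(expression: str) -> str:
    """Tiny arithmetic tool: evaluates 'a op b' without using eval()."""
    match = BINARY_RE.fullmatch(expression)
    if match is None:
        return "error: expected '<number> <op> <number>'"
    a, op, b = float(match.group(1)), match.group(2), float(match.group(3))
    if op == "/" and b == 0:
        return "error: division by zero"
    result = {"+": a + b, "-": a - b, "*": a * b, "/": a / b if b else 0.0}[op]
    return f"{result:g}"


def react(question: str, llm: Callable[[str], str],
          tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # the model writes a Thought plus an Action or a Final Answer
        transcript += step + "\n"
        final = FINAL_RE.search(step)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(step)
        if action is None:
            raise ValueError("model produced neither an action nor a final answer")
        name, arg = action.groups()
        observation = tools[name](arg) if name in tools else f"unknown tool {name!r}"
        transcript += f"Observation: {observation}\n"  # fed back for the next thought
    raise RuntimeError("step limit reached without a final answer")


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real LLM: acts once, then answers with what it observed."""
    if "Observation:" not in transcript:
        return "Thought: I need to multiply 12 by 7.\nAction: calculator[12 * 7]"
    observed = transcript.strip().splitlines()[-1].split("Observation: ", 1)[1]
    return f"Thought: The tool returned {observed}.\nFinal Answer: {observed}"


print(react("What is 12 times 7?", scripted_llm, {"calculator": calculator}))
```

The `max_steps` limit corresponds to the "or an error is raised" exit described above: a loop that never reaches a final answer is stopped rather than allowed to run indefinitely.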

Beyond CoT and ReAct, there are many ongoing studies aiming to further improve AI agents’ reasoning capabilities. Personally I believe there are two ways to improve AI’s performance in solving complex and dynamic tasks:

- Enhance a single LLM’s reasoning capability;

- Create multiple agents to collaboratively solve a complex problem. A segue into the next section!

Multi-agents

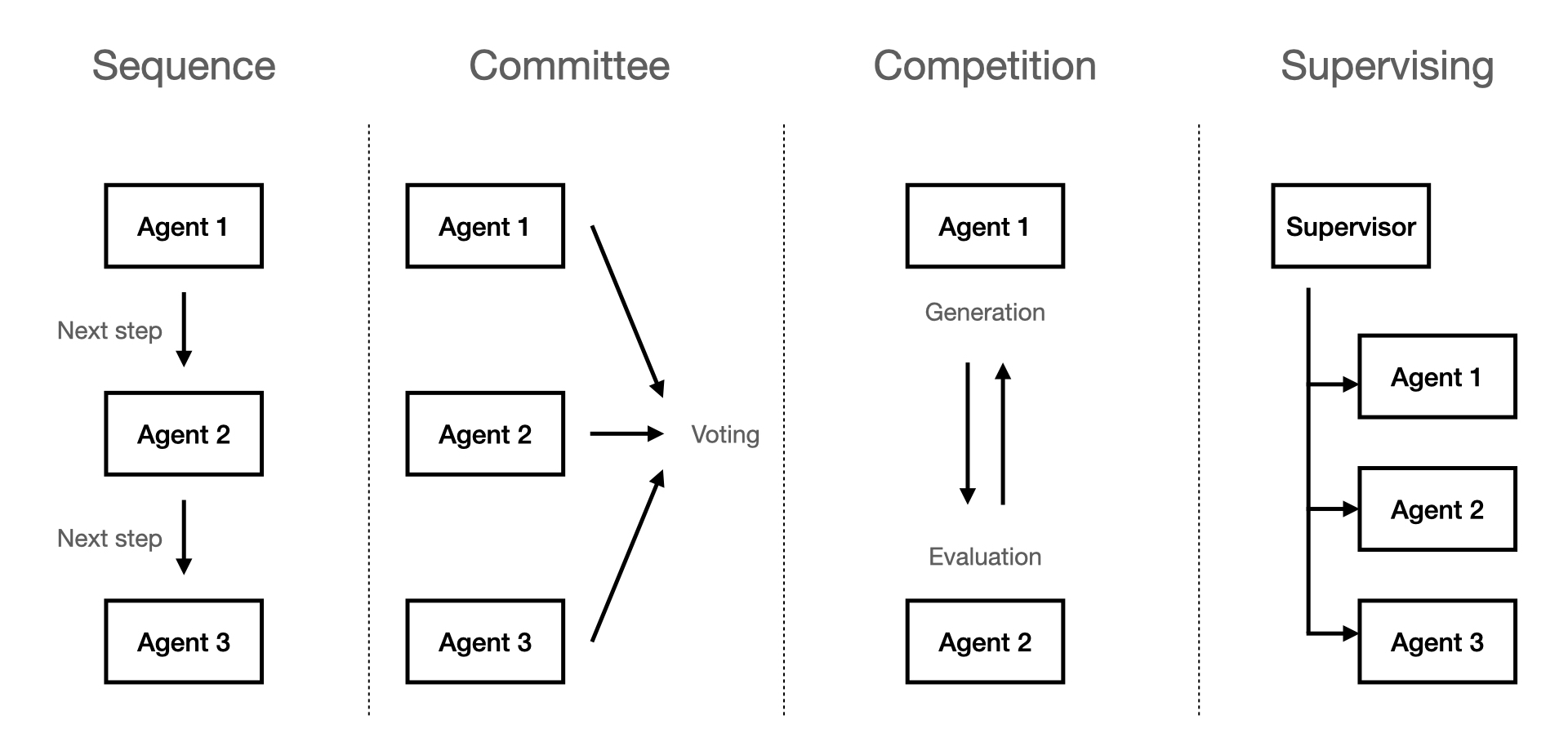

Just like we humans work in teams, multiple AI agents can also work together to solve problems. There are different ways for AI agents to work together.

Sequential workflows

In this setup, agents work in sequential workflows. For example, Agent 1 is responsible for parsing a customer support request and drafting a report, Agent 2 assists human experts in generating a solution to the customer’s problem, and Agent 3 drafts a personalized email based on the solution and sends it back to the customer.

Committee of agents

In machine learning, ensemble learning and committee machines have long been well-known methods. Instead of relying on the output from one model, a committee of agents gathers outputs from multiple models (which could be multiple instances of the same LLM, or different LLMs) and produces a combined answer via techniques such as majority voting.

Competition

For use cases such as debating legal issues and software vulnerability assessment, two agents play a simulated game against each other, each trying to exploit the other’s mistakes and improve its own outputs.

Supervising

In this setup, an LLM acts as the supervisor and distributes tasks to a team of agents with different tools. For example, in a market research use case, the supervisor might break down the task into data collection, analysis, and report drafting. The supervisor then distributes those tasks to the team of agents and validates the outputs.

An illustration of different multi-agent systems.
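The supervisor pattern for the market research example can be sketched as plan, dispatch, validate. All names below (`supervise`, `stub_plan`, the three workers, and the `non_empty` check) are hypothetical; in a real system the plan and the validation would each be produced by an LLM call, and each worker would be an agent with its own tools.

```python
from typing import Callable, Dict, List, Tuple

Worker = Callable[[str, List[str]], str]  # (subtask, earlier outputs) -> output
Planner = Callable[[str], List[Tuple[str, str]]]  # task -> [(worker name, subtask)]
Validator = Callable[[str, str], bool]  # (subtask, output) -> accepted?


def supervise(task: str, plan: Planner, workers: Dict[str, Worker],
              validate: Validator) -> List[str]:
    """Break the task down, hand each subtask to a worker, and check every output."""
    outputs: List[str] = []
    for worker_name, subtask in plan(task):
        output = workers[worker_name](subtask, outputs)
        if not validate(subtask, output):
            raise ValueError(f"{worker_name} produced an invalid result for {subtask!r}")
        outputs.append(output)
    return outputs


# Stand-ins so the sketch runs offline.
def stub_plan(task: str) -> List[Tuple[str, str]]:
    return [
        ("collector", f"collect data on {task}"),
        ("analyst", f"analyze data on {task}"),
        ("writer", f"draft report on {task}"),
    ]


stub_workers: Dict[str, Worker] = {
    "collector": lambda subtask, earlier: "raw data",
    "analyst": lambda subtask, earlier: f"analysis of {earlier[-1]}",
    "writer": lambda subtask, earlier: f"report based on {earlier[-1]}",
}


def non_empty(subtask: str, output: str) -> bool:
    return bool(output.strip())


for line in supervise("EV market", stub_plan, stub_workers, non_empty):
    print(line)
```

Passing the earlier outputs to each worker is one possible design choice; it lets the analyst build on the collector's data and the writer build on the analysis without the workers knowing about each other.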

Conclusion

If you are still reading this blog, you might wonder what will happen when we live in a world full of AI agents and AI-generated content. I don’t dare to make any predictions. But I believe AI agents are already here. For example, a self-driving vehicle is a typical AI agent. Yes, the current LLM-based AI agents are still fairly limited in their reasoning capabilities. But with more powerful LLMs, AI agents are going to become better and better very fast!

We are also witnessing the combination of LLMs, computer vision, and robotics. The combination of those technologies will lead to the creation of multi-modal AI agents in the physical world. For example, check out those amazing (or scary) robots presented at the 2024 GTC by NVIDIA CEO Jensen Huang. LLMs provide great interfaces for robots to understand human languages and interact with humans.

Finally, with great power comes great responsibility. It is a cliché, but true for AI this time.

References

[1] Wei, Jason, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Fei Xia, Ed Chi, Quoc V. Le, and Denny Zhou. “Chain-of-thought prompting elicits reasoning in large language models.” Advances in Neural Information Processing Systems 35 (2022): 24824-24837.

[2] Yao, Shunyu, Jeffrey Zhao, Dian Yu, Nan Du, Izhak Shafran, Karthik Narasimhan, and Yuan Cao. “ReAct: Synergizing Reasoning and Acting in Language Models.” In International Conference on Learning Representations (ICLR). 2023.

[3] Wang, Xuezhi, Jason Wei, Dale Schuurmans, Quoc Le, Ed Chi, Sharan Narang, Aakanksha Chowdhery, and Denny Zhou. “Self-consistency improves chain of thought reasoning in language models.” arXiv preprint arXiv:2203.11171 (2022).

[4] Yao, Shunyu, Dian Yu, Jeffrey Zhao, Izhak Shafran, Tom Griffiths, Yuan Cao, and Karthik Narasimhan. “Tree of thoughts: Deliberate problem solving with large language models.” Advances in Neural Information Processing Systems 36 (2024).