What is Retrieval-Augmented Generation (RAG)? -- Part 2: Embedding

This image is generated by AI (DALL·E 3).

In case of potential copyright infringement, please let me know.

This is the second part of a three-part blog.

In part one of this blog, we discussed why adding context helps LLMs generate better outputs. But we left a question open: how does AI find and use the right context in a long document?

The short answer is that AI splits the document into smaller chunks, embeds each chunk into a vector, and then finds the vectors that are closest to the embedded question. In this second part of the blog, we explain what embedding is.

Transforming texts to vector representations

“An AI must fundamentally understand the world around us, and we argue that this can only be achieved if it can learn to identify and disentangle the underlying explanatory factors hidden in the observed milieu of low-level sensory data.”

The landmark paper “Representation learning: A review and new perspectives” by Bengio et al. is arguably one of the most influential papers that laid the foundation for today’s AI boom. Representation learning has become a key and fundamental technique for natural language processing (NLP), computer vision (CV), speech recognition and many other areas. In this paper, the authors described representation learning as follows:

“… learning representations of the data that make it easier to extract useful information when building classifiers or other predictors.”

But how is representation learning related to our topic, which is embedding texts? Well, embedding is learning representations of texts in a vector space, such that the vectors of similar texts are close to each other. And as Bengio and his co-authors said, building classifiers or predictors becomes easier on top of the learned representations than on the raw data. This is why AI-based image recognition (classification) and ChatGPT (predicting the next words) have become so successful: they are built on deep representation learning instead of raw data. For RAG, embedding is the key for AI to be able to find the most relevant context in a split document. Instead of directly searching for similar raw texts (how do we even define similarity for texts?), RAG finds the vectors that are closest to the vector representing the user’s question.
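To make "closest" concrete, here is a minimal sketch that uses the Euclidean distance, which is only one possible choice of distance. The three-dimensional vectors and the two texts are made up for illustration; a real embedding model produces vectors with far more dimensions.

```python
import math

# Hypothetical 3-dimensional embeddings, invented for this illustration.
toy_vectors = {
    "student nations in Uppsala": [0.9, 0.1, 0.2],
    "how to bake bread": [0.1, 0.9, 0.3],
}
# Stands in for the embedded user question.
question_vector = [0.8, 0.2, 0.1]

# Euclidean distance between each text vector and the question vector.
distances = {
    text: math.dist(vector, question_vector)
    for text, vector in toy_vectors.items()
}

# The text whose vector has the smallest distance is the best match.
closest_text = min(distances, key=distances.get)
print(closest_text)
```

With these toy numbers, the text about student nations ends up much closer to the question than the text about bread.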

In an even earlier and also very important paper, “A neural probabilistic language model” by Bengio et al., the authors stated:

“… instead of characterizing the similarity with a discrete random or deterministic variable (which corresponds to a soft or hard partition of the set of words), we use a continuous real-vector for each word, i.e. a learned distributed feature vector, to represent similarity between words.”

The above idea of using neural networks to embed texts into vectors was one of Yoshua Bengio’s significant breakthroughs recognized by the 2018 Turing Award.

Techniques and models for embedding texts

In this section, we give a short overview of the development of text embedding using deep representation learning. This has been a large and popular research topic in recent years, so in this blog we can only briefly touch on some core ideas and milestones. For interested readers, I recommend the book Representation Learning for Natural Language Processing (open access) for a comprehensive understanding of this topic.

The early days: frequency based approaches

The early ideas of measuring the importance of words using their frequencies in a sentence or a document date back to the 1950s to 1970s, when fundamental methods such as bag-of-words and term frequency-inverse document frequency (TF-IDF) were proposed. The bag-of-words method considers each document as a multiset (bag) of words and counts the frequency of each word in the document. TF-IDF is a more sophisticated frequency based approach where the frequency of each word in a document (TF) is adjusted by its inverse document frequency (IDF), computed across all documents. Intuitively, IDF quantifies how much information each word carries. For example, the word “the” appears very frequently and contains little information, while the word “Uppsala” appears much less frequently and tells us more about what the document is talking about.
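The two ideas above can be sketched in a few lines of Python. This is a minimal sketch assuming raw term frequency and idf = log(N / df), which is one common variant among several (libraries often add smoothing). The function name `tf_idf` and the three example documents are made up for illustration.

```python
import math
from collections import Counter

def tf_idf(documents):
    """Return one {word: weight} dictionary per document.

    Assumes tf = count / document length and idf = log(N / df).
    """
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each word occur?
    df = Counter(word for tokens in tokenized for word in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)  # the bag-of-words of this document
        weights.append({
            word: (count / len(tokens)) * math.log(n_docs / df[word])
            for word, count in counts.items()
        })
    return weights

docs = [
    "the university in uppsala is the oldest in sweden",
    "the cat sat on the mat",
    "the weather is cold in the north",
]
weights = tf_idf(docs)
print(weights[0]["the"], weights[0]["uppsala"])
```

Because “the” occurs in every document, its IDF is log(1) = 0 and its weight vanishes, while “uppsala” occurs in only one document and keeps a positive weight.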

Frequency based approaches, especially TF-IDF, have a long history of being used in various text related applications including information retrieval. However, these approaches have an obvious limitation: they do not capture the semantic similarity between different words.
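A small experiment shows this limitation. The sketch below compares word-count vectors with cosine similarity; the helper `bow_cosine` and the example phrases are made up for illustration.

```python
import math
from collections import Counter

def bow_cosine(text_a, text_b):
    """Cosine similarity between the word-count vectors of two texts."""
    a = Counter(text_a.lower().split())
    b = Counter(text_b.lower().split())
    # Only words that appear in both texts contribute to the dot product.
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)

# Same meaning, no shared words.
synonyms = bow_cosine("fast car", "quick automobile")
# Different meaning, one shared word.
shared_word = bow_cosine("fast car", "fast food")
print(synonyms, shared_word)
```

The two phrases with the same meaning get a similarity of zero because they share no words, while “fast car” and “fast food” look similar only because of the shared word “fast”.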

Word2vec

In 2013, a group of researchers at Google published two research papers:

- Mikolov, Tomas, Kai Chen, Greg Corrado, and Jeffrey Dean. Efficient estimation of word representations in vector space,

- Mikolov, Tomas, Ilya Sutskever, Kai Chen, Greg S. Corrado, and Jeffrey Dean. Distributed representations of words and phrases and their compositionality,

which introduced neural network models for word representations in vector space. Among those researchers, Jeff Dean is one of the most influential scientists in AI and now the Chief Scientist at Google, and Ilya Sutskever left Google and co-founded OpenAI in 2015. The above papers are behind one of the most widely applied word embedding tools, word2vec.

In the above papers, Mikolov et al. proposed two mirrored model architectures, namely Continuous Bag-of-Words (CBOW) and Skip-gram. CBOW is a neural network that predicts the current word based on its neighbours, while Skip-gram is a neural network that predicts the surrounding words given the current word. This approach embeds words into vector representations which capture the semantic relationships between words. In the first paper, the authors stated:
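The mirrored nature of the two architectures is easiest to see in the training examples they use. The sketch below only builds those examples and does not train a network; the helper names, the example sentence and the window size are made up for illustration.

```python
def context_window(tokens, index, window):
    """Words within `window` positions of tokens[index], excluding itself."""
    left = tokens[max(0, index - window):index]
    right = tokens[index + 1:index + 1 + window]
    return left + right

def cbow_examples(tokens, window=2):
    """CBOW: predict the current word from its neighbours."""
    return [
        (context_window(tokens, i, window), word)
        for i, word in enumerate(tokens)
    ]

def skipgram_examples(tokens, window=2):
    """Skip-gram: predict each neighbour from the current word."""
    return [
        (word, neighbour)
        for i, word in enumerate(tokens)
        for neighbour in context_window(tokens, i, window)
    ]

tokens = "the cat sat on the mat".split()
cbow = cbow_examples(tokens, window=1)
skipgram = skipgram_examples(tokens, window=1)
print(cbow[1])       # (neighbours, current word) for "cat"
print(skipgram[:3])  # (current word, neighbour) pairs
```

For the word “cat”, CBOW gets the input `["the", "sat"]` with the target `"cat"`, while Skip-gram gets the pairs `("cat", "the")` and `("cat", "sat")`.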

“… we found that when we train high dimensional word vectors on a large amount of data, the resulting vectors can be used to answer very subtle semantic relationships between words …

… Word vectors with such semantic relationships could be used to improve many existing NLP applications, such as machine translation, information retrieval and question answering systems, and may enable other future applications yet to be invented.”

It is fascinating to notice how the above findings, made in a separate research effort, echo Yoshua Bengio’s remarks about representation learning. Today, word2vec is still widely used in various NLP tasks such as topic modelling. It has also been integrated into popular machine learning libraries such as Gensim and TensorFlow.

Encoder-decoders

In parallel with representation learning for NLP, computer vision researchers have long adopted the idea of representation learning for image classification and generation. For example, in the 2011 paper Transforming Auto-encoders, Geoffrey Hinton (another 2018 Turing Award recipient) and his co-authors stated:

“… artificial neural networks should use local “capsules” that perform some quite complicated internal computations on their inputs and then encapsulate the results of these computations into a small vector of highly informative outputs.”

In 2013, Kingma et al. published the paper Auto-Encoding Variational Bayes and proposed what is now known as the variational auto-encoder (VAE) model. This model has two components: a neural network which encodes the raw data (for example an image) into a probabilistic latent variable, and another neural network which decodes a sample from the latent variable back into data (a generated image). By jointly optimizing the encoder and decoder, we obtain not only a probability distribution of the data in the latent space, but also a good image generator which can turn a sample from the latent space into a new image! The VAE model is a fundamental technique behind the popular image generator Stable Diffusion. Another notable generative model architecture in image processing is the Generative Adversarial Network (GAN) by Ian Goodfellow et al. In this architecture, an image generator and a discriminator (which separates real from generated images) are trained jointly.
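For readers who like formulas, the joint optimization of the VAE can be summarized by the evidence lower bound from the Kingma and Welling paper. Here $q_\phi(z \mid x)$ is the encoder, $p_\theta(x \mid z)$ is the decoder, and $p_\theta(z)$ is the prior over the latent variable:

```latex
\mathcal{L}(\theta, \phi; x)
  = \mathbb{E}_{q_\phi(z \mid x)}\left[ \log p_\theta(x \mid z) \right]
  - D_{\mathrm{KL}}\left( q_\phi(z \mid x) \,\|\, p_\theta(z) \right)
```

The first term rewards the decoder for reconstructing the data from a latent sample, and the second term keeps the encoder’s distribution close to the prior.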

Coming back to the field of NLP, in 2014, Ilya Sutskever, Oriol Vinyals, and Quoc V. Le published a paper titled “Sequence to Sequence Learning with Neural Networks”. This paper and the seq2seq model are another major milestone in shaping today’s AI landscape. In short, the seq2seq model consists of two recurrent neural networks (RNNs), where the first network encodes a sequence of words (for example an English text) into a vector of fixed dimensionality and the second network decodes the vector into the target sequence (for example the translated text in Swedish).

Around the same time, Dzmitry Bahdanau, KyungHyun Cho, and Yoshua Bengio introduced an encoder-decoder architecture with what later became known as the attention mechanism (the preprint appeared in 2014 and the paper was published at ICLR 2015). This mechanism allows the decoder to put different weights (attention) on different words of the input sequence for each word in the output sequence, which significantly improved the performance of encoder-decoder models. After this paper, encoder-decoders using recurrent networks and attention mechanisms became the mainstream technique in NLP for tasks such as translation.
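The weighting idea can be sketched for a single output step as follows. Note the simplification: the scores below are plain dot products, whereas the paper by Bahdanau et al. computes them with a small feed-forward network. The function names and the two-dimensional states are made up for illustration.

```python
import math

def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(decoder_state, encoder_states):
    """Return (weights, context) for one output step.

    Assumption: dot-product scoring instead of the additive scoring
    used in the original paper.
    """
    scores = [
        sum(d * e for d, e in zip(decoder_state, state))
        for state in encoder_states
    ]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    # The context vector is the weighted average of the encoder states.
    context = [
        sum(w * state[i] for w, state in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context

# Hypothetical encoder states for a three-word input sentence.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
decoder_state = [2.0, 0.0]
weights, context = attend(decoder_state, encoder_states)
print(weights)
```

The first input word receives the largest weight here because its state is most aligned with the decoder state, so it contributes most to the context vector used for the next output word.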

Transformers, and the rest is history

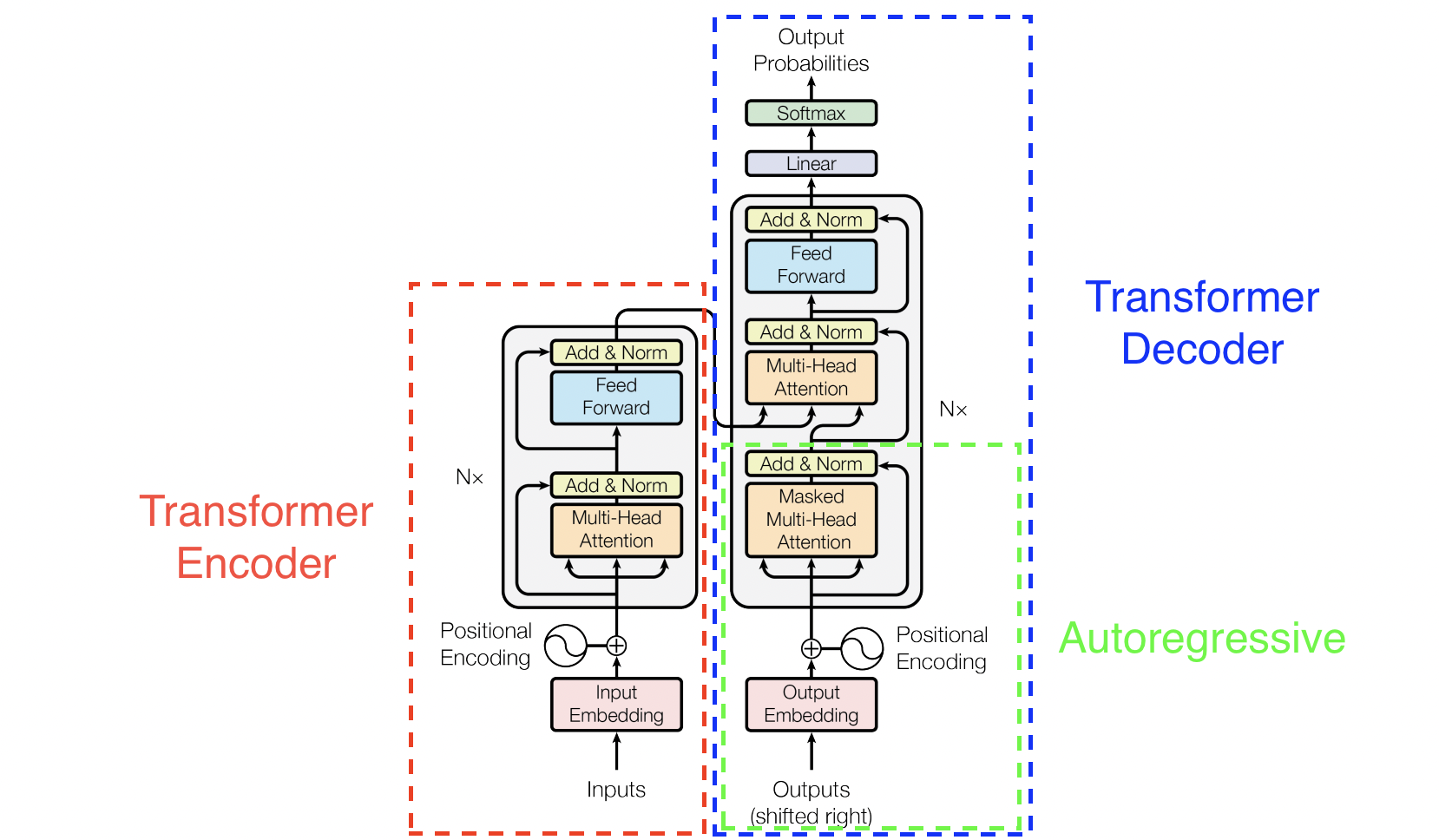

In 2017, researchers from Google published the paper “Attention is All You Need”. In this milestone paper, the authors proposed a new encoder-decoder architecture based only on attention mechanisms, without RNNs, namely the Transformer. The Transformer architecture in the paper has three main characteristics:

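- Autoregressive: it uses the input and its previous outputs to predict the next output;

- Attention in three places: self-attention in the encoder, masked self-attention in the decoder over the previous outputs, and encoder-decoder attention where the decoder attends to the encoder’s output;

- Besides attention, only feed-forward networks are used. No recurrent or convolutional networks.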
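The attention used in all three places is the scaled dot-product attention defined in the paper, where $Q$, $K$ and $V$ are the matrices of queries, keys and values, and $d_k$ is the dimension of the keys:

```latex
\mathrm{Attention}(Q, K, V)
  = \mathrm{softmax}\left( \frac{Q K^{\top}}{\sqrt{d_k}} \right) V
```

Each row of the softmax output is a set of weights over the input positions, so every output is a weighted average of the values.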

The Transformer model achieved better efficiency (in terms of the computational cost of training the model) and better performance than the best previously reported models on tasks such as translation.

The Transformer architecture presented by Vaswani et al. The red part is the Transformer encoder; the blue part is the Transformer decoder; and the green part is the autoregressive feature, meaning the model uses its own previous outputs to predict the next output.

Since then, the Transformer has become the foundation of many popular large language models such as BERT (a Transformer encoder-only model), and GPT and LLaMA (both Transformer decoder-only models). We omit the technical details and differences here. For interested readers, I highly recommend reading the original research papers.

A final word about OpenAI’s model for embedding. Since GPT is a decoder-only generative model rather than an embedding model, OpenAI has trained different models just for embedding. Details about those embedding models can be found on this OpenAI webpage.

Example: How many nations are there at Uppsala University?

Let’s revisit our RAG example from the previous post. In order for AI to retrieve the relevant information for building the context, we need to embed two texts:

- Embed the split document (the Wikipedia page about Uppsala University) into vectors;

- Embed the question “How many nations are there at Uppsala University?” into a vector.

Using LangChain and Ollama, the embedding model can be defined with the following code:

from langchain_community.embeddings import OllamaEmbeddings

embeddings = OllamaEmbeddings(model="llama2")

We can then call this embedding model to embed texts into vectors. For example:

query_vector = embeddings.embed_query("How many nations are there at Uppsala University?")

The output (query_vector) is a vector of 4096 dimensions, which matches the hidden size of the 7B LLaMA2 model. This vector is then compared, using cosine similarity, with the vectors of the split document to retrieve the most relevant information.
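The retrieval step can be sketched as follows. To keep the example runnable without a local model, it uses made-up three-dimensional vectors and chunk labels; with the embedding model defined above, the chunk vectors would instead come from `embeddings.embed_documents(chunks)` and the query vector from `embeddings.embed_query(...)`. The helper `top_k_chunks` is a hypothetical name, not part of LangChain.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k_chunks(query, chunk_vectors, chunks, k=2):
    """Return the k chunks whose vectors are most similar to the query."""
    ranked = sorted(
        zip(chunks, chunk_vectors),
        key=lambda pair: cosine_similarity(query, pair[1]),
        reverse=True,
    )
    return [chunk for chunk, _ in ranked[:k]]

# Toy stand-ins for the split document and its embeddings.
chunks = ["chunk about student nations", "chunk about history", "chunk about research"]
chunk_vectors = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.1], [0.2, 0.2, 0.9]]
toy_query_vector = [0.8, 0.3, 0.1]

best = top_k_chunks(toy_query_vector, chunk_vectors, chunks, k=1)
print(best)
```

The chunks returned by such a ranking are what gets inserted into the prompt as context for the LLM.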

References

[1] Bengio, Yoshua, Aaron Courville, and Pascal Vincent. “Representation learning: A review and new perspectives.” IEEE transactions on pattern analysis and machine intelligence 35.8 (2013): 1798-1828.

[2] Bengio, Yoshua, Réjean Ducharme, and Pascal Vincent. “A neural probabilistic language model.” Advances in neural information processing systems 13 (2000).

[3] Liu, Zhiyuan, Yankai Lin, and Maosong Sun. Representation learning for natural language processing. Springer Nature, 2023.

[4] Mikolov, Tomas, Kai Chen, Greg Corrado, and Jeffrey Dean. “Efficient estimation of word representations in vector space.” arXiv preprint arXiv:1301.3781 (2013).

[5] Mikolov, Tomas, Ilya Sutskever, Kai Chen, Greg S. Corrado, and Jeff Dean. “Distributed representations of words and phrases and their compositionality.” Advances in neural information processing systems 26 (2013).

[6] Hinton, Geoffrey E., Alex Krizhevsky, and Sida D. Wang. “Transforming auto-encoders.” In Artificial Neural Networks and Machine Learning–ICANN 2011: 21st International Conference on Artificial Neural Networks, Espoo, Finland, June 14-17, 2011, Proceedings, Part I 21, pp. 44-51. Springer Berlin Heidelberg, 2011.

[7] Kingma, Diederik P., and Max Welling. “Auto-encoding variational bayes.” arXiv preprint arXiv:1312.6114 (2013).

[8] Goodfellow, Ian, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. “Generative adversarial networks.” Communications of the ACM 63, no. 11 (2020): 139-144.

[9] Sutskever, Ilya, Oriol Vinyals, and Quoc V. Le. “Sequence to sequence learning with neural networks.” Advances in neural information processing systems 27 (2014).

[10] Bahdanau, Dzmitry, Kyunghyun Cho, and Yoshua Bengio. “Neural machine translation by jointly learning to align and translate.” arXiv preprint arXiv:1409.0473 (2014).

[11] Vaswani, Ashish, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin. “Attention is all you need.” Advances in neural information processing systems 30 (2017).

[12] Devlin, Jacob, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. “Bert: Pre-training of deep bidirectional transformers for language understanding.” arXiv preprint arXiv:1810.04805 (2018).

[13] Improving language understanding with unsupervised learning: https://openai.com/research/language-unsupervised

[14] Touvron, Hugo, Thibaut Lavril, Gautier Izacard, Xavier Martinet, Marie-Anne Lachaux, Timothée Lacroix, Baptiste Rozière et al. “Llama: Open and efficient foundation language models.” arXiv preprint arXiv:2302.13971 (2023).

[15] Neelakantan, Arvind, Tao Xu, Raul Puri, Alec Radford, Jesse Michael Han, Jerry Tworek, Qiming Yuan et al. “Text and code embeddings by contrastive pre-training.” arXiv preprint arXiv:2201.10005 (2022).

[16] Kusupati, Aditya, Gantavya Bhatt, Aniket Rege, Matthew Wallingford, Aditya Sinha, Vivek Ramanujan, William Howard-Snyder et al. “Matryoshka representation learning.” Advances in Neural Information Processing Systems 35 (2022): 30233-30249.