What is MCP?

Table of contents:

- Background

- What is MCP?

- Let’s look at an example

- Discussion

Background

New models, ideas, and concepts pop up in the AI world on a weekly basis. This time it is the Model Context Protocol (MCP). The concept was introduced by Anthropic, a competitor of OpenAI and the creator of the AI model Claude. After Anthropic’s first announcement in November 2024, MCP did not attract wide attention immediately. In recent months, however, MCP has become a very popular concept and has created a lot of buzz in the tech community.

So what is MCP? “Think of MCP like a USB-C port for AI …”

Stop! This analogy does NOT help at all and only creates more confusion (at least for me). So I needed to read the technical documentation more carefully, and perhaps run some example code myself, to try to understand what MCP really is. That is the purpose of this blog post.

But before we dive into MCP, let’s take one step back and talk about why MCP is popular and perhaps important. A big motivation for MCP is the boom in AI agents. Exactly one year ago, I wrote blog posts about AI agents (and RAG). Essentially, AI agents are foundation models connected to different tools (APIs). As more and more tools become available to AI models and agentic solutions grow increasingly complicated, people have started to wonder whether there should be a standard way for AI models to interact with different tools. That is the core idea of MCP.

What is MCP?

So what really is MCP?

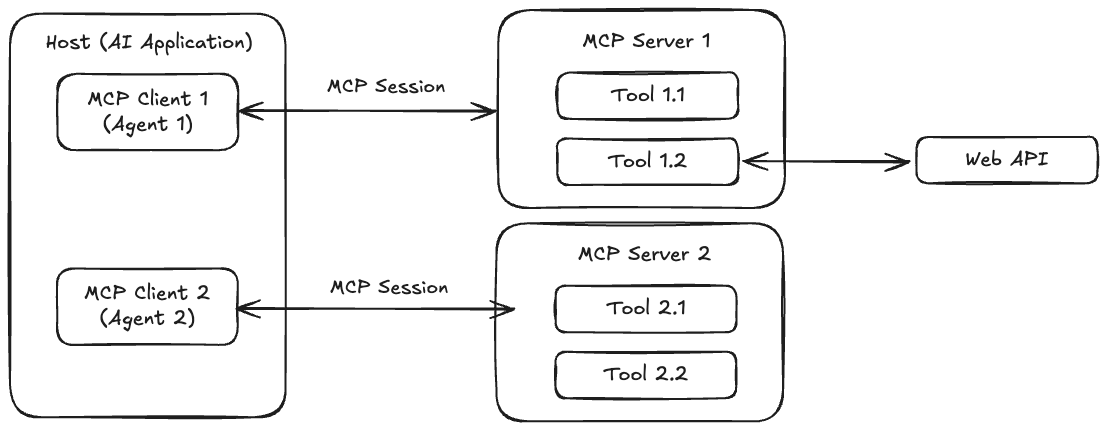

Essentially, MCP is a framework for how AI models interact with tools such as databases, web services, and local software. MCP defines the following concepts (quoted from the documentation [1]):

- Hosts are LLM applications that initiate connections
- Clients maintain 1:1 connections with servers, inside the host application
- Servers provide context, tools, and prompts to clients

For example, suppose we implement an agentic chatbot with access to several tools, including an API for a weather forecast and alert service. The host is the chatbot application itself. A more complex application could have multiple agents working together.

Within the AI application, an MCP client initiates the connection to an MCP server. This MCP client could be one AI agent. In the simple weather chatbot example, the AI application contains only one agent, so in this case we could say the AI application is essentially a single MCP client.

The MCP server provides one or more tools. Besides tools, an MCP server can also provide resources (such as files) and prompt templates. Very often, a tool in the MCP server is a wrapper around an API to a web service on the other side of the Internet. However, as far as I understand, the MCP server itself usually runs locally, on the same machine where the AI application is deployed.
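To make the three roles concrete, here is a toy, in-process model of them. It is not the MCP SDK: `ToyServer`, `ToyClient`, and `ToyHost` are hypothetical classes, and real clients and servers exchange messages over a transport instead of calling each other’s methods directly. The sketch only shows the shape of the architecture: one host, one client per server, and each client bound to exactly one server.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToyServer:
    """Hypothetical stand-in for an MCP server: a named set of tools."""
    name: str
    tools: dict[str, Callable[..., str]]

    def call_tool(self, tool: str, **kwargs) -> str:
        return self.tools[tool](**kwargs)


@dataclass
class ToyClient:
    """Hypothetical stand-in for an MCP client: bound to exactly one server."""
    server: ToyServer

    def list_tools(self) -> list[str]:
        return sorted(self.server.tools)

    def call_tool(self, tool: str, **kwargs) -> str:
        return self.server.call_tool(tool, **kwargs)


@dataclass
class ToyHost:
    """Hypothetical stand-in for the host application: one client per server."""
    clients: dict[str, ToyClient] = field(default_factory=dict)

    def connect(self, server: ToyServer) -> None:
        # A new client is created for every server (the 1:1 rule)
        self.clients[server.name] = ToyClient(server)

    def all_tools(self) -> dict[str, list[str]]:
        return {name: c.list_tools() for name, c in self.clients.items()}


host = ToyHost()
host.connect(ToyServer("weather", {"get_alerts": lambda state: f"No alerts for {state}"}))
host.connect(ToyServer("files", {"read_file": lambda path: f"<contents of {path}>"}))
print(host.all_tools())  # {'weather': ['get_alerts'], 'files': ['read_file']}
print(host.clients["weather"].call_tool("get_alerts", state="NY"))  # No alerts for NY
```

In this toy, giving two hosts access to the same tool would mean each host creating its own client, and in the real protocol typically its own server process, which is exactly the question raised next.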

But I don’t know why MCP defines a 1:1 connection between a client and a server. What if multiple applications want to access the same tool? Do I need to create multiple MCP server processes? Admittedly, it would be much harder to design and implement a robust, high-performance MCP server that can handle multiple clients. But I think that is probably where things are headed.

My understanding of MCP based on the core architecture in the documentation.

Let’s look at an example

OK, that’s enough theory. Let’s look at the quickstart example of an MCP client and server, which implements a weather alert and forecast chatbot.

MCP Server

The MCP Python SDK provides a class named FastMCP, whose instances offer decorator methods such as mcp.resource(), mcp.tool(), and mcp.prompt() for registering resources, tools, and prompts.
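As far as I understand, FastMCP builds each tool definition (name, description, input schema) from the decorated function’s name, type hints, and docstring. The toy class below mimics that decorator style using only the standard library. `ToyMCP`, its three registries, the `config://units` resource, and the small type mapping are my own hypothetical stand-ins, not the SDK’s internals.

```python
import inspect
from typing import Callable

# Toy mapping from Python annotations to JSON Schema types (small subset)
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


class ToyMCP:
    """Hypothetical stand-in mimicking FastMCP's decorator style."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.tools: dict[str, Callable] = {}
        self.resources: dict[str, Callable] = {}
        self.prompts: dict[str, Callable] = {}

    def tool(self) -> Callable[[Callable], Callable]:
        def register(fn: Callable) -> Callable:
            self.tools[fn.__name__] = fn
            return fn  # the function itself is left unchanged
        return register

    def resource(self, uri: str) -> Callable[[Callable], Callable]:
        def register(fn: Callable) -> Callable:
            self.resources[uri] = fn
            return fn
        return register

    def prompt(self) -> Callable[[Callable], Callable]:
        def register(fn: Callable) -> Callable:
            self.prompts[fn.__name__] = fn
            return fn
        return register

    def list_tools(self) -> list[dict]:
        """Build name/description/inputSchema from signatures and docstrings."""
        result = []
        for name, fn in self.tools.items():
            params = inspect.signature(fn).parameters
            result.append({
                "name": name,
                "description": inspect.getdoc(fn) or "",
                "inputSchema": {
                    "type": "object",
                    "properties": {p: {"type": JSON_TYPES[v.annotation]} for p, v in params.items()},
                    "required": [p for p, v in params.items() if v.default is inspect.Parameter.empty],
                },
            })
        return result


mcp = ToyMCP("weather")


@mcp.tool()
async def get_forecast(latitude: float, longitude: float) -> str:
    """Get weather forecast for a location."""
    return f"(forecast for {latitude}, {longitude})"


@mcp.resource("config://units")
def units() -> str:
    return "imperial"


@mcp.prompt()
def summarize(city: str) -> str:
    return f"Summarize today's weather in {city} in two sentences."


print(mcp.list_tools()[0]["inputSchema"]["properties"])
# {'latitude': {'type': 'number'}, 'longitude': {'type': 'number'}}
```

The design point is that the decorator only records the function and returns it unchanged, so the tool definition that a client later sees is derived entirely from ordinary Python metadata.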

In this weather service example, the MCP server provides two tools, get_alerts and get_forecast. Both tools use the National Weather Service API provided by the US Federal Government.

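```python
from mcp.server.fastmcp import FastMCP

# Create the MCP server instance (the name "weather" follows the quickstart)
mcp = FastMCP("weather")


@mcp.tool()
async def get_alerts(state: str) -> str:
    """Get weather alerts for a US state.

    Args:
        state: Two-letter US state code (e.g. CA, NY)
    """
    # Omit the implementation for simplicity ...
    alerts: list[str] = []  # placeholder: formatted alerts from the NWS API
    return "\n---\n".join(alerts)


@mcp.tool()
async def get_forecast(latitude: float, longitude: float) -> str:
    """Get weather forecast for a location.

    Args:
        latitude: Latitude of the location
        longitude: Longitude of the location
    """
    # Omit the implementation for simplicity ...
    forecasts: list[str] = []  # placeholder: formatted forecast periods
    return "\n---\n".join(forecasts)


if __name__ == "__main__":
    # Serve the tools over stdio so a local MCP client can launch this script
    mcp.run(transport="stdio")
```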
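The implementation of `get_alerts` is omitted above. Assuming the NWS alerts endpoint returns GeoJSON with a `features` list whose `properties` include fields such as `event`, `areaDesc`, `severity`, and `description` (my assumption about the response shape), the formatting step that produces the `"\n---\n"`-joined string could look roughly like this. The helper names and the sample data are hypothetical, and the HTTP request itself is left out so the sketch runs offline.

```python
def format_alert(feature: dict) -> str:
    """Turn one alert feature into a readable text block."""
    props = feature.get("properties", {})
    return (
        f"Event: {props.get('event', 'Unknown')}\n"
        f"Area: {props.get('areaDesc', 'Unknown')}\n"
        f"Severity: {props.get('severity', 'Unknown')}\n"
        f"Description: {props.get('description', 'No description available')}"
    )


def format_alerts(data: dict) -> str:
    """Join all alerts with the same separator the server code returns."""
    features = data.get("features", [])
    if not features:
        return "No active alerts for this state."
    alerts = [format_alert(f) for f in features]
    return "\n---\n".join(alerts)


# Hypothetical sample shaped like an NWS alerts response (values invented)
sample = {
    "features": [
        {"properties": {"event": "Flood Advisory", "areaDesc": "Example County",
                        "severity": "Minor", "description": "Heavy rain may cause minor flooding."}},
        {"properties": {"event": "Wind Advisory", "areaDesc": "Another County"}},
    ]
}
print(format_alerts(sample))
```

Returning one plain string, rather than raw JSON, keeps the tool output compact and easy for the model to read.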

MCP Client

On the client side, the weather chatbot does the following things:

Step 1: Initialize a connection to the MCP server. The path to the MCP server script is passed as a command-line argument when starting the MCP client.

```python
async def connect_to_server(self, server_script_path: str):
    """Connect to an MCP server

    Args:
        server_script_path: Path to the server script (.py or .js)
    """
    ...
```
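The docstring says the server script can be a `.py` or `.js` file. One plausible way for the client to decide how to launch it is to look at the file extension; `server_launch_command` below is a hypothetical helper sketching that step. As far as I can tell, the quickstart client then hands the resulting command to the SDK’s stdio transport, which starts the server as a local child process.

```python
from pathlib import Path


def server_launch_command(server_script_path: str) -> list[str]:
    """Hypothetical helper: choose an interpreter from the script's extension."""
    suffix = Path(server_script_path).suffix
    if suffix == ".py":
        return ["python", server_script_path]
    if suffix == ".js":
        return ["node", server_script_path]
    raise ValueError("Server script must be a .py or .js file")


print(server_launch_command("../weather-server-python/weather.py"))
# ['python', '../weather-server-python/weather.py']
```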

Step 2: Once connected, the client requests the list of tools provided by the MCP server.

```python
# List available tools
response = await self.session.list_tools()
tools = response.tools
print("\nConnected to server with tools:", [tool.name for tool in tools])
```
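Under the hood, `session.list_tools()` sends a JSON-RPC 2.0 request with the method `tools/list`, and with the stdio transport each message travels as one line of JSON over the server process’s stdin and stdout. The toy below reproduces just that exchange with the standard library. It skips the `initialize` handshake that a real MCP session performs first, and the toy server script and its tool list are hypothetical.

```python
import json
import subprocess
import sys

# Hypothetical toy server (not the MCP SDK): reads newline-delimited
# JSON-RPC requests from stdin and answers "tools/list" on stdout.
TOY_SERVER = """
import json, sys
TOOLS = [{"name": "get_alerts",
          "description": "Get weather alerts for a US state.",
          "inputSchema": {"type": "object",
                          "properties": {"state": {"type": "string"}},
                          "required": ["state"]}}]
while True:
    line = sys.stdin.readline()
    if not line:  # client closed stdin: shut down
        break
    req = json.loads(line)
    if req.get("method") == "tools/list":
        resp = {"jsonrpc": "2.0", "id": req["id"], "result": {"tools": TOOLS}}
    else:  # standard JSON-RPC "method not found" error
        resp = {"jsonrpc": "2.0", "id": req.get("id"),
                "error": {"code": -32601, "message": "Method not found"}}
    print(json.dumps(resp), flush=True)
"""


def list_tools_over_stdio() -> list[str]:
    # Launch the server as a local child process, as a stdio transport does
    proc = subprocess.Popen(
        [sys.executable, "-c", TOY_SERVER],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
        proc.stdin.write(json.dumps(request) + "\n")  # one message per line
        proc.stdin.flush()
        response = json.loads(proc.stdout.readline())
        return [tool["name"] for tool in response["result"]["tools"]]
    finally:
        proc.stdin.close()
        proc.wait(timeout=5)
        proc.stdout.close()


print("Connected to server with tools:", list_tools_over_stdio())
```

This also illustrates why the server usually runs locally in the quickstart setup: the client literally owns the server process and talks to it through pipes.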

Step 3: Start the chatbot with the tools provided by the MCP server.

```python
async def process_query(self, query: str) -> str:
    """Process a query using Claude and available tools"""
    messages = [
        {
            "role": "user",
            "content": query
        }
    ]

    response = await self.session.list_tools()
    available_tools = [{
        "name": tool.name,
        "description": tool.description,
        "input_schema": tool.inputSchema
    } for tool in response.tools]

    # Initial Claude API call
    response = self.anthropic.messages.create(
        model="claude-3-5-sonnet-20241022",
        max_tokens=1000,
        messages=messages,
        tools=available_tools
    )

    ...
```
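The code above stops after the first Claude call. The usual pattern from here is a loop: if the response contains `tool_use` blocks, the client runs each tool (in the real client, via `self.session.call_tool(...)`) and sends the outputs back as `tool_result` blocks, until the model replies with plain text. The sketch below shows that loop with a scripted fake model and a local tool table, both hypothetical, so it runs without an API key; the block shapes follow Anthropic’s tool-use message format [3].

```python
from typing import Callable

# Hypothetical local tools standing in for self.session.call_tool(...)
TOOLS: dict[str, Callable[..., str]] = {
    "get_alerts": lambda state: f"Flood advisory in {state}",
}


def fake_model(messages: list[dict]) -> list[dict]:
    """Scripted stand-in for Claude: request a tool once, then answer."""
    last = messages[-1]["content"]
    if isinstance(last, str):  # fresh user question -> ask for a tool
        return [{"type": "tool_use", "id": "toolu_1",
                 "name": "get_alerts", "input": {"state": "NY"}}]
    # Otherwise the last message holds tool results -> write the answer
    return [{"type": "text", "text": f"Summary: {last[0]['content']}"}]


def run_agent(query: str, max_turns: int = 5) -> str:
    messages: list[dict] = [{"role": "user", "content": query}]
    for _ in range(max_turns):
        content = fake_model(messages)
        messages.append({"role": "assistant", "content": content})
        tool_calls = [b for b in content if b["type"] == "tool_use"]
        if not tool_calls:  # plain text answer: we are done
            return "".join(b["text"] for b in content if b["type"] == "text")
        # Run each requested tool and return the outputs as tool_result blocks
        messages.append({"role": "user", "content": [
            {"type": "tool_result", "tool_use_id": call["id"],
             "content": TOOLS[call["name"]](**call["input"])}
            for call in tool_calls
        ]})
    raise RuntimeError("Gave up after too many tool-use turns")


print(run_agent("Any weather alerts for NY?"))  # Summary: Flood advisory in NY
```

The `max_turns` cap is a defensive choice of this sketch, so a model that keeps requesting tools cannot loop forever.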

Since MCP was designed by Anthropic, it is no surprise that the tool definition follows Claude’s tool-use (function calling) format [3], with name, description, and input_schema. If we want to implement an MCP client using OpenAI models, I guess we would have to translate this format into OpenAI’s function calling format [2], which uses name, description, and parameters.
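Here is a minimal sketch of that translation, assuming OpenAI’s Chat Completions `tools` format, where each entry is `{"type": "function", "function": {...}}` and the JSON Schema goes under `parameters` [2]. OpenAI’s newer Responses API uses a flatter shape without the nested `function` key, so treat this as one possible target. The function name `to_openai_tool` is hypothetical.

```python
def to_openai_tool(claude_tool: dict) -> dict:
    """Wrap a Claude-style tool definition in OpenAI's function-tool shape."""
    return {
        "type": "function",
        "function": {
            "name": claude_tool["name"],
            "description": claude_tool.get("description", ""),
            # Both formats describe arguments with JSON Schema, so it moves as-is
            "parameters": claude_tool["input_schema"],
        },
    }


claude_tool = {
    "name": "get_alerts",
    "description": "Get weather alerts for a US state.",
    "input_schema": {
        "type": "object",
        "properties": {"state": {"type": "string"}},
        "required": ["state"],
    },
}
openai_tools = [to_openai_tool(claude_tool)]
print(openai_tools[0]["function"]["parameters"]["required"])  # ['state']
```

Because both sides use JSON Schema for arguments, the translation is mostly a renaming and re-nesting of keys; the harder part in practice is translating the tool-call and tool-result messages, which also differ between the two APIs.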

Run the example

Now let’s run this weather service chatbot!

```
(myenv) (base) xiumingliu@Xiumings-MBP mcp-client-python % python client.py ../weather-server-python/weather.py
[04/06/25 09:38:13] INFO Processing request of type ListToolsRequest server.py:534
Connected to server with tools: ['get_alerts', 'get_forecast']
MCP Client Started!
Type your queries or 'quit' to exit.
Query: Give me the weather forecast for NY
[04/06/25 09:38:55] INFO Processing request of type ListToolsRequest server.py:534
[04/06/25 09:38:58] INFO Processing request of type CallToolRequest server.py:534
[04/06/25 09:38:59] INFO HTTP Request: GET https://api.weather.gov/alerts/active/area/NY "HTTP/1.1 200 OK" _client.py:1773

Based on the provided flood advisory, here's the current weather situation for New York:

Current Conditions:
- Heavy rainfall and thunderstorms
- Between 0.5 and 1 inch of rain has already fallen
- Additional 0.5 to 1.5 inches of rain expected
```

Discussion

Now that we understand at least the basics of MCP, the next question is: is MCP really a big deal, and should we start adapting our agentic projects to it?

Here is my recommendation: let’s closely monitor the development of MCP (and, more generally, the standardization of LLM-based systems). But, as of now, direct function calling or frameworks such as LangChain and LlamaIndex work just fine.

The name Model Context Protocol hints at Anthropic’s intention to drive standardization of how LLMs communicate with tools. This is of course an important initiative in shaping the future AI ecosystem.

However, a real protocol such as TCP is defined by a well-recognized standardization body (the IETF, in TCP’s case; bodies such as ITU and IEEE play a similar role for many telecom and networking standards) and is widely adopted by most, if not all, service providers. Think of today’s AI service providers, such as OpenAI, Anthropic, and Meta, as the players in the telecom industry, such as Ericsson, Nokia, and Huawei: all the major players need to follow the same standards so that different products can talk to each other in a connected world.

A lot still needs to be done to standardize LLM-related systems and communications. MCP is a great initiative and the beginning of that standardization effort. But what will be the long-lived “USB-C” for LLMs? Will we witness another protocol war [4]?

Furthermore, even if MCP is the chosen one, I think there are open questions: Should client-server connections be constrained to 1:1? Can a server be deployed remotely instead of locally? How should authentication be managed, and how do we determine whether a client should have access to the tools a server provides?

To conclude, let’s keep an eye on the development of MCP and, more generally, the standardization of LLMs and agents. But it may not be worth refactoring all our agentic solutions to follow MCP just yet, if that is even possible.

References

[1] Model Context Protocol: https://modelcontextprotocol.io/introduction

[2] OpenAI Function Calling: https://platform.openai.com/docs/guides/function-calling

[3] Anthropic Claude - Tool use (function calling): https://docs.anthropic.com/en/docs/build-with-claude/tool-use/overview#specifying-tools

[4] Wikipedia - Protocol Wars: https://en.wikipedia.org/wiki/Protocol_Wars